Not So Strict Transport Security

Background#

HTTP Strict Transport Security (HSTS) is reasonably easy to understand: how it works, how it doesn’t work, and when to use it. It seems simple enough; add a Strict-Transport-Security HTTP response header, with appropriate settings, to your website. Those lazy users that enter your website’s address without prefixing it with https:// (i.e. virtually everyone) will be automatically redirected to your website’s HTTPS version. Your users are still vulnerable when they’re visiting your site for the first time, but apart from that, it seems pretty solid.

(If you’re not up to speed with HSTS, you could go straight to the specification, or for a more gentle approach, Mozilla’s Developer Network has a good overview, as does Wikipedia.)

The TL;DR: HSTS headers only apply to the exact domain (and optionally its subdomains) the HSTS response header belongs to. This behaviour can sometimes interact with HTTP redirections in interesting ways, causing HSTS to not be applied when you might expect it to be. A scan of a range of websites shows a number of websites vulnerable to the problem.
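To make the scoping rule concrete, here is a minimal sketch of how a client might parse the header and decide whether a stored policy covers a given host. The names `HstsPolicy`, `parse_hsts` and `policy_covers` are made up for this illustration, and the parsing is deliberately simplified (it ignores policy expiry and some of the specification's stricter syntax rules).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HstsPolicy:
    max_age: int
    include_subdomains: bool


def parse_hsts(header_value: str) -> Optional[HstsPolicy]:
    """Parse a Strict-Transport-Security value; None if max-age is missing or invalid."""
    max_age = None
    include_subdomains = False
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()
        value = value.strip().strip('"')
        if name == "max-age" and value.isascii() and value.isdigit():
            max_age = int(value)
        elif name == "includesubdomains":
            include_subdomains = True
    if max_age is None:
        return None
    return HstsPolicy(max_age, include_subdomains)


def policy_covers(policy_host: str, policy: HstsPolicy, requested_host: str) -> bool:
    """True if a policy recorded for policy_host applies to requested_host."""
    policy_host = policy_host.lower().rstrip(".")
    requested_host = requested_host.lower().rstrip(".")
    if policy.max_age == 0:
        # max-age=0 tells the client to forget the policy.
        return False
    if requested_host == policy_host:
        return True
    # Subdomains are only covered when includeSubDomains was set.
    # A parent domain is never covered by a policy set on its child.
    return policy.include_subdomains and requested_host.endswith("." + policy_host)
```

Note that a policy recorded for www.example.com never covers example.com, whatever flags it carries. That asymmetry is the crux of the problem described in this post.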

The Situation#

A few weeks ago, I was performing a pentest on a web application. With regard to its use of secure transports, it seemed to tick the boxes:

✅ Enforces the use of HTTPS by redirecting all HTTP requests to their HTTPS equivalents

✅ Uses TLS and not SSL, with strong ciphersuites

✅ Uses OCSP stapling

✅ Certificate uses SHA-2

✅ Supports session caching, but not session tickets (for Perfect Forward Secrecy)

✅ Finally, it uses HSTS, with a long max-age and the includeSubDomains flag, on all HTTPS responses.

I made a note of my findings and moved on. I opened a new Chrome window and entered the web application’s domain name in the address bar. I too was “lazy”: I didn’t enter the https:// prefix, but HSTS should handle that for me, right?

Hold on - then why was I seeing HTTP traffic to the client’s webserver? Wireshark confirmed that Chrome was sending a request to the webserver over HTTP before moving to HTTPS. But shouldn’t HSTS be preventing this?

Before I describe the issue that led to this behaviour, let’s take a look at a relevant aspect that’s common to many websites.

Canonical Domain Names#

Many websites have a canonical domain name. This is the exact domain name that the website prefers to use to refer to itself. It’s usually the organisation’s apex (root) domain (example.com) or an immediate subdomain, most popularly www (www.example.com). These preferred domain names are implemented by redirecting users visiting the website using the “wrong” domain name to the canonical one.

For example, take a look at YouTube. Looking at an HTTP response to a request for http://youtube.com/, you can see it redirects to http://www.youtube.com/:

$ curl -v 'http://youtube.com/'

(..omitted..)

< HTTP/1.1 301 Moved Permanently

< Date: Sun, 03 May 2015 01:47:45 GMT

* Server gwiseguy/2.0 is not blacklisted

< Server: gwiseguy/2.0

< Location: http://www.youtube.com/

< Content-Length: 0

< Content-Type: text/html

< X-XSS-Protection: 1; mode=block

< Alternate-Protocol: 80:quic,p=1

Looking at the canonical domain names of the top 10,000 sites as ranked by Alexa:

- 61% use a www subdomain.

- 16% don’t canonicalise domain names at all: they don’t perform such redirections.

- 13% use an apex domain name.

- 3% use some other subdomain.

(The remaining 7% of sites are “other” cases: they only have one or the other entry in their DNS, do some weirder form of redirection, or couldn’t be reached at all.)

Even though the trend is to use a www subdomain, there are plenty of well-known websites that prefer their apex domain names, such as Twitter, GitHub, Instagram and Stack Overflow.
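As a rough illustration of how a site could be sorted into the categories above, the sketch below compares where a visitor lands when starting from the apex domain and from the www subdomain. This is an assumed classification scheme, not necessarily the one used to produce the figures; the function name and the category labels are invented for this example.

```python
def classify_canonicalisation(apex: str, landed_from_apex: str, landed_from_www: str) -> str:
    """Classify a site by the hosts reached after following redirects.

    landed_from_apex: final host when starting at http://<apex>/
    landed_from_www:  final host when starting at http://www.<apex>/
    """
    apex = apex.lower()
    www = "www." + apex
    from_apex = landed_from_apex.lower()
    from_www = landed_from_www.lower()

    if from_apex == apex and from_www == www:
        return "none"  # each name stays where it started: no canonicalisation
    if from_apex == from_www == www:
        return "www"
    if from_apex == from_www == apex:
        return "apex"
    if from_apex == from_www and from_apex.endswith("." + apex):
        return "other-subdomain"
    return "other"
```

For the YouTube response shown earlier, and assuming www.youtube.com does not redirect back to the apex, `classify_canonicalisation("youtube.com", "www.youtube.com", "www.youtube.com")` falls into the `"www"` bucket.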

HSTS and Redirections#

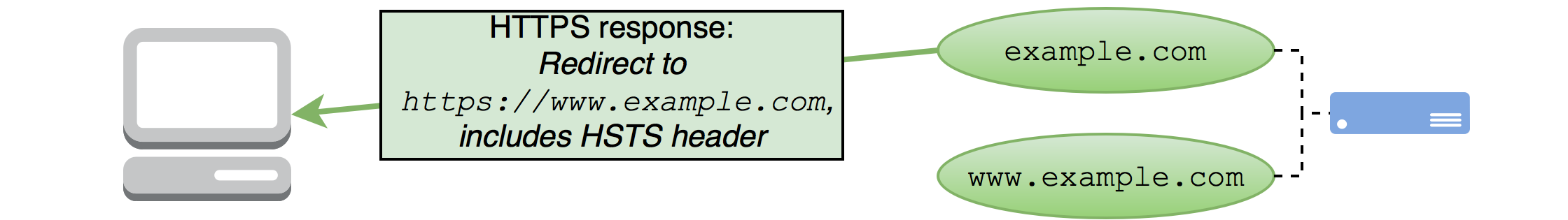

With that aside out of the way, let’s figure out why my client’s site doesn’t seem to be HSTSing correctly. Since a key part of the issue relies on the use of HTTP versus HTTPS, I’ve colour-coded the messages and URLs that were used in the requests and responses given below. HTTP is highlighted with red, while HTTPS is highlighted green.

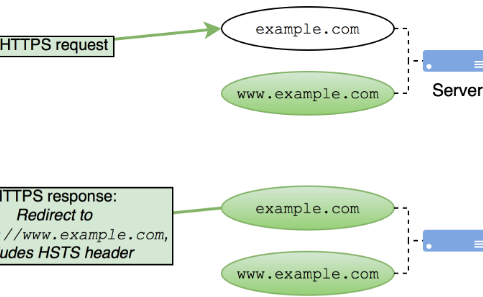

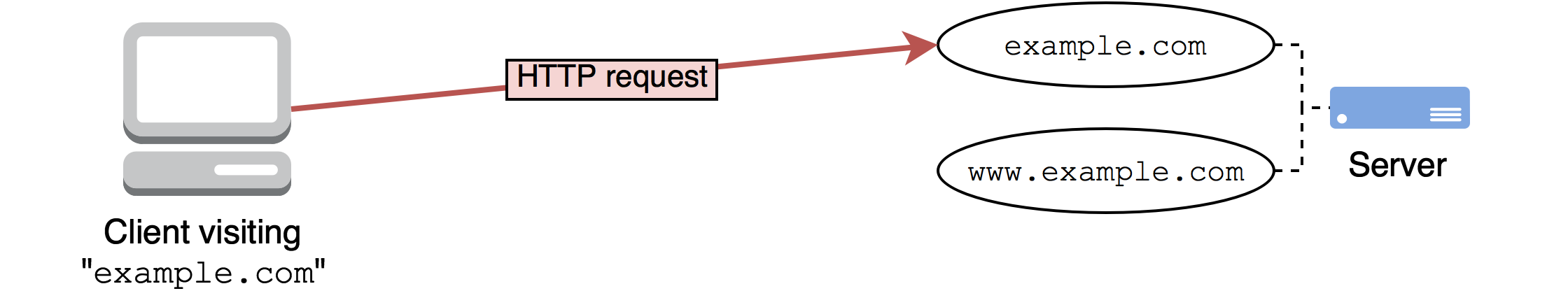

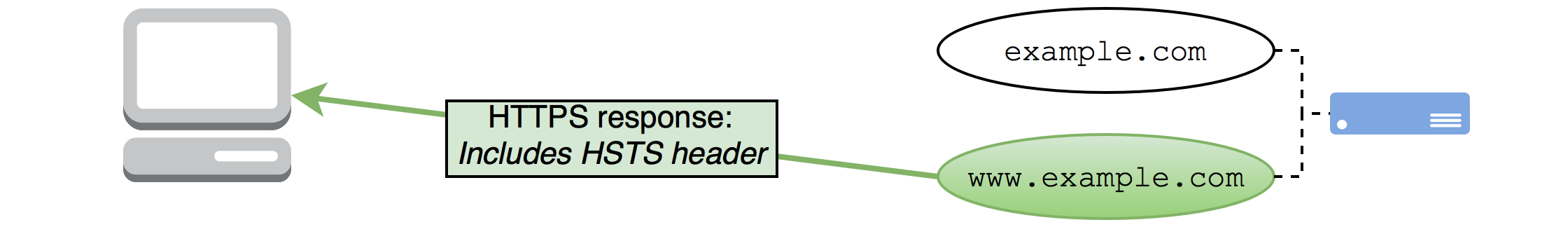

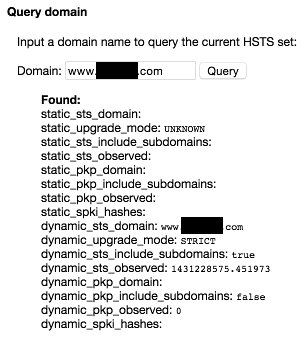

First, after entering example.com in Chrome’s address bar and hitting ENTER, it makes the initial request to the server over HTTP:

The server responds with a redirect to the HTTPS version of the URL requested, on the www subdomain:

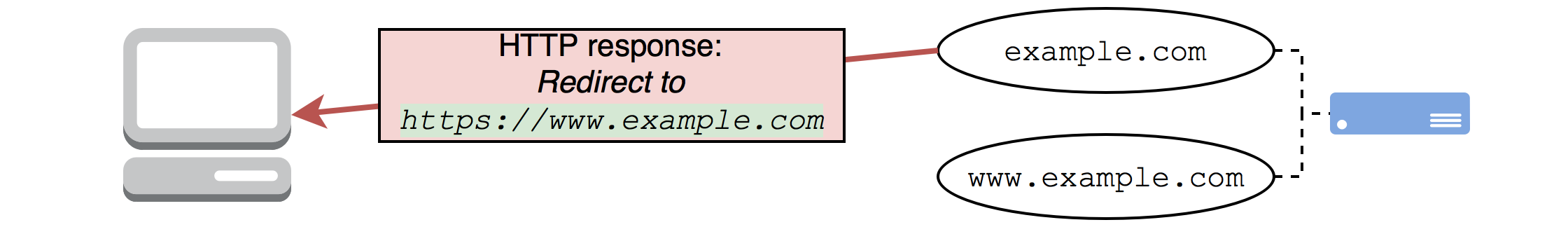

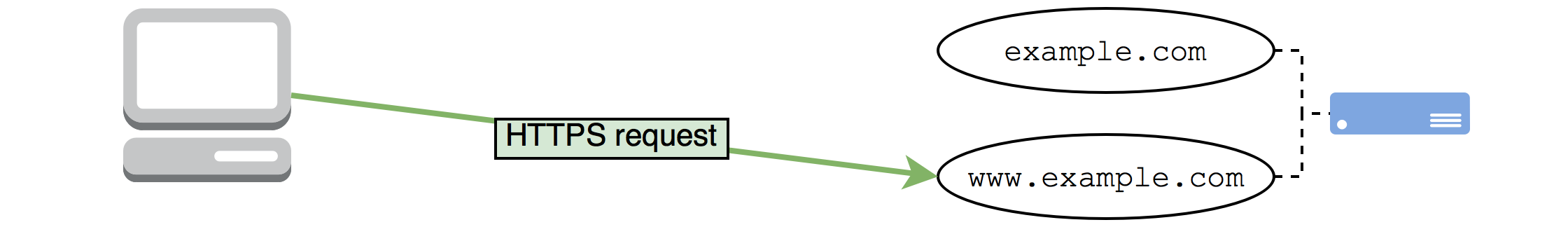

Chrome dutifully follows the redirection to https://www.example.com/, where it essentially resends its previous request, this time over HTTPS:

The response has a HSTS header:

So, what’s the net effect of these requests and responses?

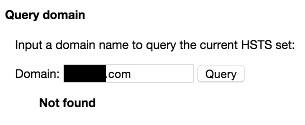

Well, the www.example.com domain has been marked as requiring the use of HTTPS thanks to the Strict-Transport-Security header in the last response, along with all its subdomains. But what about example.com? If we re-enter example.com in Chrome’s address bar and hit ENTER again, we see that the first request is still being sent out over HTTP. We can also see this by looking at Chrome’s built-in HSTS debug functionality, available at chrome://net-internals/#hsts. example.com is not in the browser’s HSTS set:

However, www.example.com is:

So, a HSTS policy has been set on www.example.com, but not example.com.

Looking at the requests and responses directly, you can see why. The client’s website uses www.example.com as its canonical domain name, and hence http://example.com has been redirected to https://www.example.com. This is a redirection across protocols (HTTP to HTTPS) and subdomains (apex domain to www subdomain) in one single step. The browser never gets the chance to receive an HSTS header in a response for something on the example.com domain, only www.example.com. Hence, the example.com domain is never marked as requiring HSTS.

OK, not great. But perhaps this was somehow intentional? Let’s look at what response we get when we explicitly request https://example.com, the HTTPS version of the domain that didn’t get HSTS applied to it:

We can see there’s a Strict-Transport-Security header in the response. Also, looking at Chrome’s HSTS debug screen shows that example.com is now marked as requiring HSTS.

So, it looks like the website intends for HSTS to be uniformly applied to both example.com and www.example.com, but that’s not what’s happening. The problem is that the website is redirecting off of the example.com domain before HSTS can be applied to it over a HTTPS connection.
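The problematic pattern is easy to spot mechanically: a single redirect that changes both the scheme and the host. The following is a small sketch of such a check, with a made-up function name; it looks at one hop only and says nothing about whether the starting host gets an HSTS policy some other way.

```python
from urllib.parse import urljoin, urlsplit


def redirect_leaves_host_before_https(request_url: str, location: str) -> bool:
    """True if an HTTP request is redirected straight to HTTPS on a different host."""
    source = urlsplit(request_url)
    # Location may be relative, so resolve it against the request URL first.
    target = urlsplit(urljoin(request_url, location))
    return (
        source.scheme == "http"
        and target.scheme == "https"
        and source.hostname != target.hostname
    )
```

Applied to the exchange above, `redirect_leaves_host_before_https("http://example.com/", "https://www.example.com/")` is true, while a redirect from http://example.com/ to https://example.com/ is not flagged, since the browser then gets a chance to see an HSTS header for example.com over HTTPS.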

What about starting the navigation from http://www.example.com? Well, that simply gets redirected to https://www.example.com, where HSTS is applied to www.example.com. Once again, however, this only applies to www.example.com, and not example.com.

So, we can summarize the results as follows:

| Visitor's address bar input | HSTS applied to example.com? | HSTS applied to www.example.com? | Future visits with same address bar input will use HTTPS? |
|---|---|---|---|
| example.com | No | Yes | No |
| www.example.com | No | Yes | Yes |
| http://example.com | No | Yes | No |
| https://example.com | Yes | Yes | Yes |
| http://www.example.com | No | Yes | Yes |
| https://www.example.com | No | Yes | Yes |

The table above shows that unless the user explicitly goes to www.example.com or specifies the https:// prefix, all of their future visits will have at least one request going over HTTP! In effect, HSTS is not being consistently applied, even though the website administrator thinks it should be.

Web-scale!#

After seeing this problem on a client’s site, I was curious as to how common it was in general. While the problem is not a new one, I decided to write a Python-based scanner that would find other sites with the same problem, creatively named hsts-scanner. (As a side note: during the development of the scanner, I ran across a vulnerability in Requests, a Python HTTP library I made use of. It seems that redirections are an “edge case” in more than just HSTS.)

The scanner flags generic failures in a site’s implementation of HSTS, not just exact cases of missing headers or cross-domain, cross-protocol redirections. This is done by having the scanner run the sites through an internal client-side implementation of the HSTS policy, just like a normal browser would. This lets it handle the wide range of ways people can mess up HSTS without having to code for each of them specifically.

The scanner's pretty status graph

I ran the scanner on Alexa’s top million websites, and found that 8,500 sites are vulnerable to this issue exactly as described here, or a variant of it which leads to the same effect. That is, visits to the site traverse through at least one domain that doesn’t get HSTS applied to it, even though the final domain you end up on does get HSTS set.
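The idea of running a redirect chain through a client-side model of HSTS can be sketched in a few lines. This is not the actual hsts-scanner code, just a simplified illustration under some assumptions: the chain is supplied as a list of (URL, header value) pairs rather than fetched from the network, policy expiry is ignored, and the helper names are invented.

```python
from typing import Dict, List, Optional, Tuple
from urllib.parse import urlsplit

# (URL requested, Strict-Transport-Security value in the response, or None)
Hop = Tuple[str, Optional[str]]


def _max_age(header: str) -> Optional[int]:
    for directive in header.split(";"):
        name, _, value = directive.strip().partition("=")
        value = value.strip().strip('"')
        if name.strip().lower() == "max-age" and value.isascii() and value.isdigit():
            return int(value)
    return None


def _include_subdomains(header: str) -> bool:
    return any(d.strip().lower() == "includesubdomains" for d in header.split(";"))


def unprotected_hosts(chain: List[Hop]) -> List[str]:
    """Return hosts visited in the chain that end up without any HSTS coverage."""
    store: Dict[str, bool] = {}  # host -> includeSubDomains flag
    visited: List[str] = []
    for url, header in chain:
        parts = urlsplit(url)
        host = parts.hostname or ""
        if host not in visited:
            visited.append(host)
        # HSTS headers only count when received over HTTPS.
        if parts.scheme != "https" or header is None:
            continue
        max_age = _max_age(header)
        if max_age is None:
            continue
        if max_age == 0:
            store.pop(host, None)  # max-age=0 removes a stored policy
        else:
            store[host] = _include_subdomains(header)

    def covered(host: str) -> bool:
        if host in store:
            return True
        return any(sub and host.endswith("." + known) for known, sub in store.items())

    return [host for host in visited if not covered(host)]
```

Feeding it the chain from the client's site, an HTTP request to example.com followed by an HTTPS response from www.example.com carrying the header, reports example.com as the host left without a policy.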

However, there’s a catch: some browsers cache certain kinds of redirections, and hence the type of redirection that causes the missing HSTS policies is important too. Different browsers have different policies on whether or not they cache a given redirection. These policies depend on the redirection type (301, 302, 303), any caching headers explicitly specified by the server in the response, and whether or not cookies are in use (and whether or not they vary over time!). This table over at Steve Souders’ site shows how much the browsers’ policies differ from each other.

Hence, the scanner needs to take into account that not all domains in an HTTP redirection chain will actually be hit again in future requests, depending on whether or not the browser caches the redirection. With this in mind, the number of sites that are vulnerable in practice (when visited with Chrome, Firefox or Safari) lowers to 4,844.

It’s also for this reason that the best way to test if your site is vulnerable or not is to actually try it in a real browser!

Some sites that are (or were!) vulnerable include:

- Wireshark

- GnuPG

- Outlook.com, through its old Hotmail addresses

- Qualys’ SSL Labs

- NCC Group

- Cyveillance

- Startmail

In addition, there are sites that are similarly misconfigured but are in the preloaded HSTS set, stopping them from being actually vulnerable in the real world. For example, this includes Tor and Shodan.

After privately disclosing the issue to popular websites that were affected, around half of the websites applied a fix.

Some other factoids uncovered by the scanner:

- 6,838 sites sent a HSTS header in a HTTP response (as opposed to HTTPS). This is an indication that HSTS policy has been “cargo culted” into webserver configuration, or just plain misconfigured.

- Only 5,788 sites have set a HSTS policy that is all-encompassing. For these sites, no matter whether a user starts with the canonical domain or some other domain, all the domains get a HSTS policy set on them.

Conclusions#

Be careful with your redirections and HSTS!#

If you never send a response with a HSTS header for a specific domain, and it’s not otherwise covered by another header with the includeSubDomains flag, it won’t be getting HSTS - even if other domains in the redirection chain are! This sounds obvious when stated directly, but the results show that many sites are in such a rush to redirect you to their canonical domain that HSTS is not applied uniformly.

Consider creating a resource on your apex domain that can be used to uniformly apply HSTS to your entire domain tree.#

This means, for example, including a reference on all your website’s pages to a blank 1x1 image that is hosted on your apex domain. The response headers for this image include a HSTS header with the includeSubDomains flag set. This way, no matter what domain your users start on and end on, they’ll have HSTS applied consistently to the entire domain after the response from the 1x1 image is received.

For example, this is what Tumblr does. Go to tumblr.com and take a look at their page source. You’ll find the following hidden away in there:

<iframe src="https://tumblr.com/hsts" height="0" width="0" style="visibility:hidden;"></iframe>

Take a look at the HTTP response headers for this iframe’s target URL, and you’ll see:

$ curl -v 'https://tumblr.com/hsts'

* Hostname was NOT found in DNS cache

* Trying 66.6.41.30

* Connected to tumblr.com (66.6.41.30) port 443 (#0)

* TLS 1.2 connection using TLS_DHE_RSA_WITH_AES_256_CBC_SHA256

* Server certificate: www.tumblr.com

* Server certificate: GeoTrust SSL CA - G3

* Server certificate: GeoTrust Global CA

> GET /hsts HTTP/1.1

> User-Agent: curl/7.37.1

> Host: tumblr.com

> Accept: */*

< HTTP/1.1 204 No Content

* Server nginx is not blacklisted

< Server: nginx

< Date: Thu, 28 May 2015 23:25:11 GMT

< Connection: keep-alive

< Strict-Transport-Security: max-age=15552001

<

* Connection #0 to host tumblr.com left intact

This way, even though Tumblr’s canonical domain uses a www subdomain (www.tumblr.com), HSTS will be applied to the apex domain (and its subdomains) because of the inclusion of a HSTS-setting resource (a page in a hidden iframe) that is from the tumblr.com apex domain.
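For illustration, an endpoint of this kind could look roughly like the sketch below, written with Python's standard `http.server` module. This is not how Tumblr implements it; the `/hsts` path mirrors their URL, while the handler name, the helper function and the max-age value are assumptions made for the example.

```python
from http.server import BaseHTTPRequestHandler


def hsts_value(max_age: int, include_subdomains: bool = True) -> str:
    """Build a Strict-Transport-Security header value."""
    value = f"max-age={max_age}"
    if include_subdomains:
        value += "; includeSubDomains"
    return value


class HstsBeaconHandler(BaseHTTPRequestHandler):
    """Answers GET /hsts with an empty 204 response that carries the HSTS header."""

    def do_GET(self):
        if self.path == "/hsts":
            self.send_response(204)
            # Assumed policy: roughly 180 days, covering all subdomains.
            self.send_header("Strict-Transport-Security", hsts_value(15552000))
            self.end_headers()
        else:
            self.send_error(404)
```

The handler only has the intended effect when it is reachable over HTTPS on the apex domain, for example behind a TLS-terminating proxy, because browsers ignore HSTS headers received over plain HTTP.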

The most important takeaway: actually test your HSTS policy!#

Try navigating to your website with a cache- and HSTS-cleared browser from all possible domains, and look at the resulting policy that is applied. You might be surprised.
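As a complement to the browser test (not a replacement, since redirect caching varies between browsers), a short script can show the raw redirect and HSTS header for each starting point. The sketch below uses only the standard library; the function names are invented, and it assumes the site answers on both the apex domain and the www subdomain.

```python
import http.client
from typing import List, Optional, Tuple
from urllib.parse import urlsplit


def start_urls(apex: str) -> List[str]:
    """The four explicit starting points a visitor might use."""
    hosts = [apex, "www." + apex]
    return [f"{scheme}://{host}/" for host in hosts for scheme in ("http", "https")]


def probe(url: str, timeout: float = 10.0) -> Tuple[int, Optional[str], Optional[str]]:
    """Send one GET without following redirects.

    Returns (status code, Location header, Strict-Transport-Security header).
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.hostname, parts.port, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)
    try:
        conn.request("GET", parts.path or "/")
        response = conn.getresponse()
        return (
            response.status,
            response.getheader("Location"),
            response.getheader("Strict-Transport-Security"),
        )
    finally:
        conn.close()
```

Calling `probe(url)` for each entry of `start_urls("example.com")` and following the `Location` values by hand shows which hosts in the chain ever send the header over HTTPS.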

Thanks to Ivan Ristic for providing thoughts on this topic, as well as all the websites that responded and applied fixes to their HSTS policies.

Disclaimer#

The information in this article is provided for research and educational purposes only. Aura Information Security does not accept any liability in any form for any direct or indirect damages resulting from the use of or reliance on the information contained in this article.